Difference between revisions of "Testing Tool (Specification)"


Revision as of 14:28, 11 June 2008

Main functionalities

Add unit/system level tests

Semantically there is no difference between unit tests and system level tests. This way all tests can be written in Eiffel in a conforming way.

A test is a routine having the prefix test in a class inheriting from TEST_SET. In general, features in classes used specifically for testing should be exported at most to {TESTING_CLASS}. This prevents testing code from remaining in a finalized system. If you write a helper class for your test routines, let it inherit from TESTING_CLASS (Note: TEST_SET already inherits from TESTING_CLASS). Additionally, you should make leaf test sets frozen and never reference testing classes directly from your project code.
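As an illustration of the export rule above, a helper class might look like the following sketch. The class name STRING_TEST_HELPER and the feature new_filled_string are hypothetical and only serve the example; TESTING_CLASS is the ancestor named in this specification, and make_filled is assumed to be the usual EiffelBase creation procedure of STRING.

```eiffel
class
	STRING_TEST_HELPER
		-- Hypothetical helper for test routines (not part of the specification).

inherit
	TESTING_CLASS

feature {TESTING_CLASS} -- Factory

	new_filled_string (n: INTEGER): STRING
			-- New string consisting of `n' copies of 'x'.
			-- Exported only to testing classes, so project code cannot call it.
		require
			n_not_negative: n >= 0
		do
			create Result.make_filled ('x', n)
		ensure
			result_exists: Result /= Void
			correct_count: Result.count = n
		end

end
```

Because every feature is exported to {TESTING_CLASS} only, a call from ordinary project code would be rejected at compile time, which is what keeps such helpers out of a finalized system.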

System level test specifics

Since system level testing often relies on external items like files, SYSTEM_LEVEL_TEST_SET provides a number of helper routines accessing them.

Config file

For each target in a configuration file you may define a testing folder in which test classes will be created. Other files needed for testing can be put there as well. It could be included as a special type of cluster, so classes in that folder will be compiled.

Proposal: /"location_of_ecf_file"/testing/"target_name"

Note: Some cases require a special testing folder when automatically creating new test cases (e.g. in a writable library, since the test might use classes which are not visible from the library). The test executor could use that folder to place log files. System level tests also rely on a location for files (such as text files containing the expected output).

Additional information

The indexing clause can be used to specify which classes and routines are tested by the test routine. Any specifications in the class indexing clause will apply to all tests in that class. Note testing_covers in the following examples.

Examples

Example unit tests test_append and test_boolean

    frozen class
        TEST_STRING

    inherit
        TEST_SET
            redefine
                set_up
            end

    feature {NONE} -- Initialization

        set_up
            do
                create s.make (10)
            end

    feature {TESTER} -- Access

        s: STRING

    feature {TESTER} -- Test routines

        test_append
            indexing
                testing_covers: "covers.STRING.append"
            require
                set_up: s /= Void and then s.is_empty
            do
                s.append ("12345")
                assert_string_equality ("append", s, "12345")
            end

        test_boolean
            indexing
                testing_covers: "covers.STRING.is_boolean, covers.STRING.to_boolean"
            require
                set_up: s /= Void and then s.is_empty
            do
                s.append ("True")
                assert_true ("boolean", s.is_boolean and then s.to_boolean)
            end

    end

Example system level test test_version (Note: SYSTEM_LEVEL_TEST_SET inherits from TEST_SET and provides basic functionality for executing external commands, including the system currently under development):

    indexing
        testing_covers: "all"

    frozen class
        TEST_MY_APP

    inherit
        SYSTEM_LEVEL_TEST_SET

    feature {TESTER} -- Test routines

        test_version
            do
                run_system_with_args ("--version")
                assert_string_equality ("version", last_output, "my_app version 0.1")
            end

    end

Manage and run test suite

The tool should have its own icon for displaying test cases (test routines). In this example it is a Lego block. Especially for views such as "list all tests for this routine", it is important to see the difference between the actual routine and its tests. The tool also has more of a vertical layout. Since the number of tests is comparable to the number of classes in the system, it makes sense for the tools to have the same layout. This also leaves room for tabs at the bottom that display further information, such as execution details (output, call stack, etc.).

The menu bar includes the following buttons:

- Create new manual test case (opens wizard)

  - if a test class is dropped on the button, the wizard will suggest creating the new test in that class

  - if a normal class (or feature) is dropped on the button, the wizard will suggest creating a test for that class (or feature)

- Menu for generating new tests (defaults to last chosen one?)

  - if a normal class/feature is dropped on the button, generate tests for that class/feature

- Menu for executing tests in the background (defaults to last chosen one?)

  - if any class/feature is dropped on the button, run the tests associated with that class/feature

- Run test in debugger (must have a test selected or dropped on the button to start)

- Stop any execution (background or debugger)

- Open settings dialog for testing

- Status indicating how many tests have been run so far and how many of them are failing

View defines in which way the test cases are listed (see below).

Filter can be used to type keywords for showing only test cases having tags including the keywords (see below). It's a drop down so predefined filter patterns can be used (such as outcome.fail).

The grid contains a tree view of all test cases (test cases are always leaves). Multiple columns provide more information. Currently there are two indications of whether a test fails (a column and icons). Only one is needed; both are shown here just to illustrate the difference. The advantage of icons is that they need less space. Coloring the background of a row containing a failing test case would be an option as well.

Tags

Each test can have a number of tags. A tag can be a single string or hierarchically structured with dots ('.'). For example, the tag covers.STRING.append marks a test as a regression test for {STRING}.append. Each test also has a number of implicit tags, such as the name tag ({TEST_STRING}.test_append has the implicit tag name.TEST_STRING.test_append).

Different views

Based on the notion of tags, we can define different views. The default view, Test sets, simply shows a hierarchical tree for every name.X tag. Further views can be defined the same way, such as Class tested, which displays every covers.X tag. Note that in views based on tags other than name., some tests may be listed multiple times, whereas tests that have no such tag must be listed explicitly. The main advantage is that users can define their own views based on any type of tag.
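The way a view selects tests by tag prefix could be sketched as follows. This is only an illustration under assumptions: the class TAG_VIEW_SUPPORT and its features is_under and view_entries are hypothetical names, not part of the specification, and the code relies only on the usual EiffelBase features of STRING, LIST and ARRAYED_LIST.

```eiffel
class
	TAG_VIEW_SUPPORT
		-- Hypothetical helper illustrating tag based views (not part of the specification).

feature -- Query

	is_under (a_tag, a_prefix: STRING): BOOLEAN
			-- Is `a_tag' equal to `a_prefix' or below it in the dot hierarchy?
			-- "covers.STRING.append" is under "covers" and "covers.STRING",
			-- but not under "covers.STR".
		require
			tag_exists: a_tag /= Void
			prefix_valid: a_prefix /= Void and then not a_prefix.is_empty
		do
			if a_tag.is_equal (a_prefix) then
				Result := True
			elseif a_tag.count > a_prefix.count then
				Result := a_tag.substring (1, a_prefix.count).is_equal (a_prefix)
					and then a_tag.item (a_prefix.count + 1) = '.'
			end
		end

	view_entries (a_tags: LIST [STRING]; a_view: STRING): ARRAYED_LIST [STRING]
			-- Tags in `a_tags' under which a test appears in the view
			-- rooted at `a_view' (e.g. "name" or "covers").
		require
			tags_exist: a_tags /= Void
			view_valid: a_view /= Void and then not a_view.is_empty
		do
			create Result.make (a_tags.count)
			from
				a_tags.start
			until
				a_tags.after
			loop
				if is_under (a_tags.item, a_view) then
					Result.extend (a_tags.item)
				end
				a_tags.forth
			end
		ensure
			result_exists: Result /= Void
		end

end
```

Under these assumptions, a test tagged covers.STRING.is_boolean and covers.STRING.to_boolean yields two entries for the view rooted at covers and is therefore listed twice, while a test without any covers tag yields an empty list and has to be placed in the view explicitly.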

Running tests

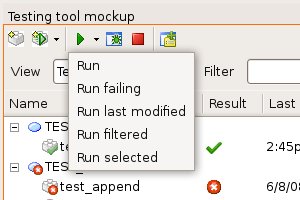

The run menu provides different options for running tests in the background:

- Run all tests in system

- Run currently failing ones

- Run test for classes last modified (better description needed here)

- Only run tests shown below

- Only run tests which are selected below

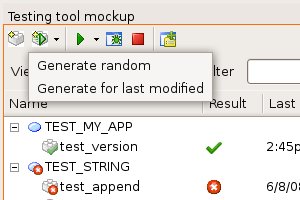

Generate tests automatically

The generate menu lets you generate new tests for all classes in the system (randomly picked?) or for classes which were last modified.

Extract tests from a running application

This is a simple example of an extracted test case (note that EXTRACTED_TEST_SET inherits from TEST_SET and implements all functionality for executing an extracted test).

    class
        TEST_STRING_001

    inherit
        EXTRACTED_TEST_SET

    feature {NONE} -- Initialization

        set_up_routine is
                -- <Precursor>
            do
                routine_under_test := agent {STRING}.append_integer
            end

    feature {TESTER} -- Test routines

        test_append_integer is
                -- Call `routine_under_test' with input provided by `context'.
            indexing
                tag: "covers.STRING.append_integer"
            do
                call_routine_under_test
            end

    feature {NONE} -- Access

        context: ARRAY [TUPLE [id: STRING; type: STRING; inv: BOOLEAN; attributes: ARRAY [STRING]]] is
                -- <Precursor>
            once
                Result := <<
                    ["#operand", "TUPLE [STRING, INTEGER]", True, << "#2", "110" >>],
                    ["#2", "STRING", True, << "this is an integer: " >>]
                >>
            end

    end -- class TEST_STRING_001

EXTRACTED_TEST_SET implements set_up (frozen), but has a deferred feature set_up_routine which assigns the proper agent to routine_under_test. This basically replaces the missing reflection functionality for calling features. context is also deferred in EXTRACTED_TEST_SET and contains all data from the heap and call stack which was reachable by the routine at extraction time. Each TUPLE represents an object, where `inv' defines whether the object should fulfill its invariant or not (if the object was on the stack at extraction time, this does not have to be the case).

This design lets us have the entire test in one file. This is practical especially in the situation where a user should submit such a test as a bug report after experiencing a crash.

The drawback currently is that the design only allows us to have one test per class. The reason for that is mainly the set_up procedure. Creating all objects in context must be done during set_up. If there is a failure, the set_up will be blamed instead of the actual test routine, which makes the test not fail but invalid. This can happen e.g. if one of the objects in the context does not fulfill its invariant, which again could result from simply editing the class being tested. Any suggestions welcome!

Background test execution

Open questions

(This section should disappear as the questions get answered.)