Difference between revisions of "Testing Tool (Specification)"

(Screenshots for testing tool with first comments) |

m (→Manage and run test suite) |

||

| (30 intermediate revisions by 4 users not shown) | |||

| Line 10: | Line 10: | ||

Semantically there is no difference between unit tests and system level tests. This way all tests can be written in Eiffel in a conforming way. | Semantically there is no difference between unit tests and system level tests. This way all tests can be written in Eiffel in a conforming way. | ||

| − | A test is a routine | + | A test is a arbitrary routine in a class inheriting from '''EQA_TEST_SET''' (the routine must be exported to '''ANY'''). |

| − | ==== System level test specifics ==== | + | ==== System level test specifics (not yet implemented) ==== |

| − | Since system level testing often relies on external items like files, ''' | + | Since system level testing often relies on external items like files, '''SYSTEM_LEVEL_EQA_TEST_SET''' provides a number of helper routines accessing them. These classes will probably have to be in an special testing library, since they also make use of other libraries such as the process library. |

| + | ==== Location of tests (config file) ==== | ||

| − | + | Tests can be located in any cluster of the system. In addition one can define test specific clusters through in the .ecf file. These clusters do not need to exists for the project to compile. This allows one to have library tests but being able to deliver the library without including the test suite. | |

| + | For all classes in the test clusters, the inheritance structure will be evaluated so test classes inheriting from EQA_TEST_SET can be found. For any class belonging to a normal cluster, it will have to be reachable from root class to be compiled and detected as a test class. This means the recommended practice is to put test classes into a test cluster, but it is not a rule. | ||

| − | + | {{Note| Not all classes in a test cluster have to be classes containing tests. One example are helper classes.}} | |

| − | + | Test clusters are also needed to provide a location for test generation/extraction. | |

==== Additional information ==== | ==== Additional information ==== | ||

| − | The indexing clause can be used to specify which classes and routines are tested by the test routine. Any specifications in the class indexing clause will apply to all tests in that class. Note ''' | + | The indexing clause can be used to specify which classes and routines are tested by the test routine. Any specifications in the class indexing clause will apply to all tests in that class. Note the '''covers/''' tag in the following examples. |

| Line 34: | Line 36: | ||

<eiffel> | <eiffel> | ||

| − | frozen class | + | frozen class STRING_TESTS |

inherit | inherit | ||

| − | + | EQA_TEST_SET | |

redefine | redefine | ||

set_up | set_up | ||

| Line 50: | Line 52: | ||

end | end | ||

| − | feature { | + | feature {TESTER} -- Access |

s: STRING | s: STRING | ||

| − | feature { | + | feature {TESTER} -- Test routines |

test_append | test_append | ||

| − | + | note | |

| − | + | testing: "covers/{STRING}.append, platform/os/winxp" | |

require | require | ||

set_up: s /= Void and then s.is_empty | set_up: s /= Void and then s.is_empty | ||

do | do | ||

s.append ("12345") | s.append ("12345") | ||

| − | + | assert ("append", s.is_equal ("12345") | |

end | end | ||

test_boolean | test_boolean | ||

| − | + | note | |

| − | + | testing: "covers/{STRING}.is_boolean, covers/{STRING}.to_boolean" | |

require | require | ||

set_up: s /= Void and then s.is_empty | set_up: s /= Void and then s.is_empty | ||

do | do | ||

s.append ("True") | s.append ("True") | ||

| − | + | assert ("boolean", s.is_boolean and then s.to_boolean) | |

end | end | ||

| Line 81: | Line 83: | ||

| − | Example system level test '''test_version''' (Note: ''' | + | Example system level test '''test_version''' (Note: '''EQA_SYSTEM_LEVEL_TEST_SET''' inherits from '''EQA_TEST_SET''' and provides basic functionality for executing external commands, including the system currently under development): |

<eiffel> | <eiffel> | ||

| Line 92: | Line 94: | ||

inherit | inherit | ||

| − | + | EQA_SYSTEM_LEVEL_TEST_SET | |

| − | feature { | + | feature {TESTER} -- Test routines |

test_version | test_version | ||

| + | note | ||

| + | testing: "platform/os/linux/x86" | ||

do | do | ||

run_system_with_args ("--version") | run_system_with_args ("--version") | ||

| − | assert_string_equality ("version", last_output, "my_app version 0.1") | + | assert_string_equality ("version", last_output, "my_app version 0.1 - linux x86") |

end | end | ||

| Line 108: | Line 112: | ||

=== Manage and run test suite === | === Manage and run test suite === | ||

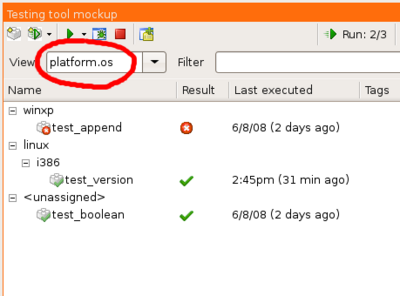

| − | + | [[Image:testing_set-view.png|right|400px|thumb|Standard view listing existing test sets and the tests they contain]] | |

| + | [[Image:testing_cut-view.png|right|400px|thumb|Predefined ''Class tested'' view listing classes/features of the system together with the associated tests (Note: since test_boolean is tagged to cover multiple features, it also appears multiple times in the view)]] | ||

| + | [[Image:testing_user-view.png|right|400px|thumb|User defined view (by simply typing part of the tag), where the tool creates a view based on how the tests are tagged (see Examples above)]] | ||

| − | + | The tool should have an own icon for displaying test cases (test routines). In this example it is a Lego block. Especially for views like ''list all tests for this routine'', it is important to see the difference between the actual routine and its tests. Also the tool has more of a vertical layout. Since the number of tests is comparable to the number of classes in the system, it makes sense the tools have the same layout. Also it allows to have tabs in the bottom for displaying further information, such as execution details (output, call stack, etc.). | |

| − | + | ||

| − | + | ||

| − | The tool should have an own icon for displaying test cases (test routines). In this example it is a Lego block. Especially for views like ''list all tests for this routine | + | |

The '''menu bar''' includes following buttons: | The '''menu bar''' includes following buttons: | ||

| Line 134: | Line 137: | ||

'''View''' defines in which way the test cases are listed (see below). | '''View''' defines in which way the test cases are listed (see below). | ||

| − | '''Filter''' can be used to type keywords for showing only test cases having tags including the keywords. It's a drop down so predefined filter patterns can be used (such as ''outcome.fail'') | + | '''Filter''' can be used to type keywords for showing only test cases having tags including the keywords (see below). It's a drop down so predefined filter patterns can be used (such as ''outcome.fail''). |

| + | |||

| + | The '''grid''' contains a tree view of all test cases (test cases are always in leaves). Multiples columns for more information. Currently there are two indications whether a test fails or not (column and icons). Obviously it only needs one - they are both shown just to see the difference. The advantage with using icons is that less space is needed. Coloring the background of a row containing a failing test case would be an option as well. | ||

| + | |||

| + | ==== Tags ==== | ||

| + | |||

| + | Each test can have a number of tags. Tags can be a single string or hierarchically structured with slashes ('/'). For example, a test with tag ''covers/{STRING}.append'' means that this test is a regression test for {STRING}.append. There are a number of implicit tags for each test, such like the ''class'' tag ({STRING_TESTS}.test_append has the implicit tag ''class/{STRING_TESTS}.test_append''). | ||

| + | |||

| + | {{Note|Tags are defined as strings, but in the view we sometimes want a tag to represent a class, or a feature. The way this is done right now is that the view basically knows if the tag starts with "covers/" it is followed by a class and feature name. Another approach would be to define such tags like this: "covers.{CLASS_NAME}.feature_name". This would allow user defined tags to have clickable nodes in the view. We could also introduces other special tags such like dates/times.}} | ||

| + | |||

| + | You can create your own tags. Such as for the Eweasel tagging, you might have following tags in your note clause | ||

| + | <eiffel> | ||

| + | test_somthing | ||

| + | -- Test something | ||

| + | note | ||

| + | testing: "eweasel/pass, eweasel/tcf" | ||

| + | do | ||

| + | -- test something | ||

| + | end | ||

| + | </eiffel> | ||

| + | Just to keep things structured if at some point you have Eweasel tests and unit tests in the same project. | ||

| + | |||

| + | Please make sure the test suite always has the latest version of the note clause by refreshing after something changed (refresh button in the AutoTest tool). | ||

| + | |||

| + | |||

| + | ==== Different views ==== | ||

| + | |||

| + | Based on the notion of tags, we are able to define different views. The default view ''Test sets'' simply shows a hierarchical tree for every ''name.X'' tag. This enables us to define more views, such as ''Class tested'', which displays every ''covers.X'' tag. Note that with other tags than ''name.'' some tests might get listed multiple times where other not containing such a tag must be listed explicitly. The main advantage is that the user can define his own views based on any type of tags. | ||

| + | |||

| + | |||

| + | {{Note|The tools should support multiple selection. This is important for executing a number of selected test routines, showing passed execution results, etc. Also when selecting a e.g. class node it should execute all leaves below that node.}} | ||

| − | |||

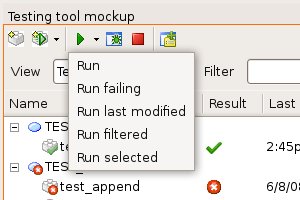

==== Running tests ==== | ==== Running tests ==== | ||

[[Image:testing_run-menu.jpg]] | [[Image:testing_run-menu.jpg]] | ||

| − | + | The '''run''' menu provides different options for running tests in the background: | |

| − | + | * Run all tests in system | |

| + | * Run currently failing ones | ||

| + | * Run test for classes last modified (better description needed here) | ||

| + | * Only run tests shown below | ||

| + | * Only run tests which are selected below | ||

| + | |||

| + | |||

| + | {{Note|We should have two different views for displaying testing history. One structured by test sessions (list of test execution containing all test routines for each session) and one listing recent executions for a single test routine.}} | ||

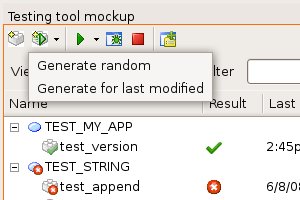

=== Generate tests automatically === | === Generate tests automatically === | ||

| Line 149: | Line 188: | ||

[[Image:testing_generate-menu.jpg]] | [[Image:testing_generate-menu.jpg]] | ||

| − | === | + | The '''generate''' menu lets you generate new tests for all classes in system (randomly picked?) or for classes which where last modified. |

| + | |||

| + | === Extract tests from a running application === | ||

| + | |||

| + | |||

| + | This is the a simple example of an extracted test case (note that '''EQA_EXTRACTED_TEST_SET''' inherits from '''EQA_TEST_SET''' and implements all functionality for executing an extracted test). | ||

| + | |||

| + | <eiffel> | ||

| + | indexing | ||

| + | testing: "type/extracted" | ||

| + | |||

| + | class | ||

| + | STRING_TESTS_001 | ||

| + | |||

| + | inherit | ||

| + | |||

| + | EQA_EXTRACTED_TEST_SET | ||

| + | |||

| + | feature {TESTER} -- Test routines | ||

| + | |||

| + | test_append_integer | ||

| + | -- Call `routine_under_test' with input provided by `context'. | ||

| + | note | ||

| + | testing: "covers/{STRING}.append_integer" | ||

| + | do | ||

| + | run_extracted_test (agent {STRING}.append_integer, ["#1", 100]) | ||

| + | end | ||

| + | |||

| + | feature {NONE} -- Access | ||

| + | |||

| + | context: !ARRAY [!TUPLE [type: !TYPE [ANY]; attributes: !TUPLE; inv: BOOLEAN]] | ||

| + | -- <Precursor> | ||

| + | once | ||

| + | Result := << | ||

| + | [{STRING}, [ | ||

| + | "this is an integer: " | ||

| + | ], False] | ||

| + | >> | ||

| + | end | ||

| + | |||

| + | end -- class STRING_TESTS_001 | ||

| + | |||

| + | </eiffel> | ||

| + | |||

| + | |||

| + | Each test routines passes the agent of the routine to be tested, along with a tuple containing the arguments (#x refering to objects in `context'). This basically replaces the missing reflection functionality for calling features. '''context''' is also deferred in '''EQA_EXTACTED_TEST_SET''' and contains all data from the heap and call stack which was reachable by the routine at extraction time. Each TUPLE represents an object, where `inv' defines whether the object should fulfill its invariant or not (if the object was on the stack at extraction time, this does not have to be the case). | ||

| + | |||

| + | |||

| + | This design lets us have the entire test in one file. This is practical especially in the situation where a user should submit such a test as a bug report after experiencing a crash. | ||

| + | The reason for that is mainly the set_up procedure. Creating all objects in '''context''' must be done during set_up. If there is a failure, the set_up will be blamed instead of the actual test routine, which makes the test not fail but invalid. This can happen e.g. if one of the objects in the context does not fulfill its invariant, which again could result from simply editing the class being tested. Any suggestions welcome! | ||

=== Background test execution === | === Background test execution === | ||

== Open questions == | == Open questions == | ||

(This section should disappear as the questions get answered.) | (This section should disappear as the questions get answered.) | ||

| + | |||

| + | == Wish list == | ||

| + | |||

| + | If you have any suggestions or ideas which would improve the testing tool, please add them to this section. | ||

| + | |||

| + | === tests with context === | ||

| + | it would be nice to have contextual test cases. | ||

| + | For instance you would create a test class | ||

| + | and you create an associated "test point" (kind of breakpoint, or like aspect programming) | ||

| + | and then, when you run the execution in "testing mode" (under the debugger, or testing tool) | ||

| + | whenever you reach this "test point", it would trigger the associated test case. | ||

| + | I agree, it is not anymore automatic testing, since you need to make sure the execution goes by this "test point", | ||

| + | but this would be useful to launch specific test with a valid context. | ||

| + | |||

| + | === jUnit compatible output format === | ||

| + | It would be nice if the testing tool could generate a jUnit compatible output which can then be processed by other tools like a continuous build system. Of course this makes it necessary for the testing tool to be available from the command line. | ||

| + | |||

| + | === Compatibility with Getest === | ||

| + | Since Gobo test has been available long before Eiffel test, it would be nice if the testing tool also supported GOBO test cases. This is probably really easy to do since the difference are minimal. Basically only that gobo test cases require a creation procedure. It would also be a good start if EiffelTest would still recognize test cases when they list a creation procedure(s) as long as default_create is listed. | ||

== See also == | == See also == | ||

Latest revision as of 19:06, 30 December 2009


Main functionalities

Add unit/system level tests

Semantically there is no difference between unit tests and system level tests. This way all tests can be written in Eiffel in a conforming way.

A test is an arbitrary routine in a class inheriting from EQA_TEST_SET (the routine must be exported to ANY).

System level test specifics (not yet implemented)

Since system level testing often relies on external items like files, EQA_SYSTEM_LEVEL_TEST_SET provides a number of helper routines for accessing them. These classes will probably have to be in a special testing library, since they also make use of other libraries such as the process library.

Location of tests (config file)

Tests can be located in any cluster of the system. In addition, one can define test-specific clusters in the .ecf file. These clusters do not need to exist for the project to compile. This allows a library to have tests while still being delivered without its test suite.

For all classes in the test clusters, the inheritance structure will be evaluated so that test classes inheriting from EQA_TEST_SET can be found. A class belonging to a normal cluster has to be reachable from the root class to be compiled and detected as a test class. This means the recommended practice is to put test classes into a test cluster, but it is not a rule.

Note: Not all classes in a test cluster have to be classes containing tests. Helper classes are one example.

Test clusters are also needed to provide a location for test generation/extraction.

Additional information

The indexing clause can be used to specify which classes and routines are tested by the test routine. Any specifications in the class indexing clause will apply to all tests in that class. Note the covers/ tag in the following examples.

Examples

Example unit tests test_append and test_boolean

```eiffel
frozen class STRING_TESTS

inherit
    EQA_TEST_SET
        redefine
            set_up
        end

feature {NONE} -- Initialization

    set_up
        do
            create s.make (10)
        end

feature {TESTER} -- Access

    s: STRING

feature {TESTER} -- Test routines

    test_append
        note
            testing: "covers/{STRING}.append, platform/os/winxp"
        require
            set_up: s /= Void and then s.is_empty
        do
            s.append ("12345")
            assert ("append", s.is_equal ("12345"))
        end

    test_boolean
        note
            testing: "covers/{STRING}.is_boolean, covers/{STRING}.to_boolean"
        require
            set_up: s /= Void and then s.is_empty
        do
            s.append ("True")
            assert ("boolean", s.is_boolean and then s.to_boolean)
        end

end
```

Example system level test test_version (Note: EQA_SYSTEM_LEVEL_TEST_SET inherits from EQA_TEST_SET and provides basic functionality for executing external commands, including the system currently under development):

```eiffel
indexing
    testing_covers: "all"

frozen class TEST_MY_APP

inherit
    EQA_SYSTEM_LEVEL_TEST_SET

feature {TESTER} -- Test routines

    test_version
        note
            testing: "platform/os/linux/x86"
        do
            run_system_with_args ("--version")
            assert_string_equality ("version", last_output, "my_app version 0.1 - linux x86")
        end

end
```

Manage and run test suite

The tool should have its own icon for displaying test cases (test routines). In this example it is a Lego block. Especially for views like list all tests for this routine, it is important to see the difference between the actual routine and its tests. The tool also has more of a vertical layout. Since the number of tests is comparable to the number of classes in the system, it makes sense for the tools to have the same layout. This also leaves room for tabs at the bottom for displaying further information, such as execution details (output, call stack, etc.).

The menu bar includes the following buttons:

- Create new manual test case (opens wizard)

  - If a test class is dropped on the button, the wizard will suggest creating a new test in that class

  - If a normal class (or feature) is dropped on the button, the wizard will suggest creating a test for the class (or feature)

- Menu for generating new test (defaults to last chosen one?)

  - If a normal class/feature is dropped on the button, generate tests for that class/feature

- Menu for executing tests in background (defaults to last chosen one?)

  - If any class/feature is dropped on the button, run the tests associated with that class/feature

- Run test in debugger (must have a test selected or dropped on button to start)

- Stop any execution (background or debugger)

- Open settings dialog for testing

- Status indicating how many tests have been run so far and how many are failing

View defines in which way the test cases are listed (see below).

Filter can be used to type keywords for showing only test cases having tags including the keywords (see below). It's a drop down so predefined filter patterns can be used (such as outcome.fail).
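The filtering rule can be sketched in a few lines. The snippet below is only an illustration in Python, not part of the tool: the function name `matches_filter` is hypothetical, and it assumes that every typed keyword must occur (case-insensitively, as a substring) in at least one tag of the test. The specification does not fix these details.

```python
def matches_filter(tags, keywords):
    """Return True if every keyword occurs in at least one tag.

    Assumption: keywords are combined with AND and matched as
    case-insensitive substrings; the spec leaves this open.
    """
    return all(
        any(keyword.lower() in tag.lower() for tag in tags)
        for keyword in keywords
    )


# Sample data modelled on the examples in this page; "outcome.fail" is the
# predefined filter pattern mentioned in the text.
tests = {
    "STRING_TESTS.test_append": ["covers/{STRING}.append", "platform/os/winxp", "outcome.fail"],
    "STRING_TESTS.test_boolean": ["covers/{STRING}.is_boolean", "covers/{STRING}.to_boolean"],
}

failing = [name for name, tags in tests.items() if matches_filter(tags, ["outcome.fail"])]
string_tests = [name for name, tags in tests.items() if matches_filter(tags, ["covers", "string"])]
```

With this sample data, `failing` contains only `test_append`, while `string_tests` contains both routines.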

The grid contains a tree view of all test cases (test cases are always in leaves). Multiple columns provide more information. Currently there are two indications of whether a test fails or not (column and icons). Obviously only one is needed; both are shown just to see the difference. The advantage of using icons is that less space is needed. Coloring the background of a row containing a failing test case would be an option as well.

Tags

Each test can have a number of tags. Tags can be a single string or hierarchically structured with slashes ('/'). For example, a test with tag covers/{STRING}.append means that this test is a regression test for {STRING}.append. There are a number of implicit tags for each test, such as the class tag ({STRING_TESTS}.test_append has the implicit tag class/{STRING_TESTS}.test_append).
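As a rough illustration of the tag format, the following Python sketch splits the value of a `testing:` note into tags and path segments and builds the implicit class tag. The helper names `parse_testing_note` and `implicit_class_tag` are hypothetical, and the comma as tag separator is inferred from the examples on this page rather than stated as a rule.

```python
def parse_testing_note(note):
    """Split a `testing:` note value into tags, each a list of path segments.

    Assumption: tags are separated by commas and segments by '/',
    as in the examples on this page.
    """
    tags = [tag.strip() for tag in note.split(",") if tag.strip()]
    return [tag.split("/") for tag in tags]


def implicit_class_tag(class_name, routine_name):
    """Build the implicit `class/{CLASS}.routine` tag of a test routine."""
    return f"class/{{{class_name}}}.{routine_name}"


parsed = parse_testing_note("covers/{STRING}.append, platform/os/winxp")
implicit = implicit_class_tag("STRING_TESTS", "test_append")
```

Here `parsed` holds one two-segment tag and one three-segment tag, and `implicit` is the string `class/{STRING_TESTS}.test_append`.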

Note: Tags are defined as strings, but in the view we sometimes want a tag to represent a class or a feature. The way this is done right now is that the view knows that a tag starting with "covers/" is followed by a class and feature name. Another approach would be to define such tags like this: "covers.{CLASS_NAME}.feature_name". This would allow user-defined tags to have clickable nodes in the view. We could also introduce other special tags such as dates/times.

You can create your own tags. For Eweasel tagging, for example, you might have the following tags in your note clause:

```eiffel
test_something
        -- Test something
    note
        testing: "eweasel/pass, eweasel/tcf"
    do
        -- test something
    end
```

This keeps things structured if at some point you have Eweasel tests and unit tests in the same project.

Please make sure the test suite always has the latest version of the note clause by refreshing after something changed (refresh button in the AutoTest tool).

Different views

Based on the notion of tags, we are able to define different views. The default view Test sets simply shows a hierarchical tree for every name.X tag. This enables us to define more views, such as Class tested, which displays every covers.X tag. Note that with tags other than name., some tests might be listed multiple times, whereas tests not containing such a tag must be listed explicitly. The main advantage is that users can define their own views based on any type of tag.
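How a view could be derived from tags is sketched below in Python, purely for illustration. The function `build_view`, the `_tests` key for leaves and the separate list of tests without a matching tag are all assumptions; the specification only says that such tests must be listed explicitly, not how.

```python
def build_view(tests, prefix):
    """Build a nested dict for all tags whose first segment equals `prefix`.

    tests maps a test name to its slash-separated tags. Returns the tree
    and the names of tests that carry no tag with that prefix.
    """
    tree = {}
    untagged = []
    for name, tags in tests.items():
        matching = [tag for tag in tags if tag.split("/")[0] == prefix]
        if not matching:
            untagged.append(name)
        for tag in matching:
            node = tree
            for segment in tag.split("/")[1:]:
                node = node.setdefault(segment, {})
            # Hypothetical convention: tests hang under the "_tests" key.
            node.setdefault("_tests", []).append(name)
    return tree, untagged


tests = {
    "test_append": ["covers/{STRING}.append", "platform/os/winxp"],
    "test_boolean": ["covers/{STRING}.is_boolean", "covers/{STRING}.to_boolean"],
    "test_version": ["platform/os/linux/x86"],
}

covers_tree, covers_untagged = build_view(tests, "covers")
platform_tree, platform_untagged = build_view(tests, "platform")
```

In the `covers` view, `test_boolean` appears under two nodes because it is tagged to cover two features, and `test_version` ends up in the list of tests without a `covers` tag.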

Note: The tools should support multiple selection. This is important for executing a number of selected test routines, showing past execution results, etc. Also, when selecting e.g. a class node, it should execute all leaves below that node.

Running tests

The run menu provides different options for running tests in the background:

- Run all tests in system

- Run currently failing ones

- Run test for classes last modified (better description needed here)

- Only run tests shown below

- Only run tests which are selected below
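The run options above amount to different ways of choosing a subset of tests. The Python sketch below illustrates this under stated assumptions: the function `select_tests` and the option names are hypothetical, each test is reduced to its last outcome, and the "classes last modified" option is left out because its meaning is still open.

```python
def select_tests(option, tests, shown=(), selected=()):
    """Return the names of the tests to run for a given run-menu option.

    tests maps a test name to its last outcome ("pass", "fail" or None).
    shown and selected are the names currently displayed or selected
    in the grid.
    """
    if option == "all":
        return sorted(tests)
    if option == "failing":
        return sorted(name for name, outcome in tests.items() if outcome == "fail")
    if option == "shown":
        return [name for name in shown if name in tests]
    if option == "selected":
        return [name for name in selected if name in tests]
    raise ValueError(f"unknown run option: {option}")


outcomes = {"test_append": "fail", "test_boolean": "pass", "test_version": None}

run_all = select_tests("all", outcomes)
run_failing = select_tests("failing", outcomes)
run_selected = select_tests("selected", outcomes, selected=["test_version"])
```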

Note: We should have two different views for displaying testing history: one structured by test sessions (a list of test executions containing all test routines for each session) and one listing recent executions for a single test routine.
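Both history views can be derived from the same list of execution records. The following Python sketch is only an illustration; the record layout `(session, test, outcome)` and the function names are assumptions, and the records are assumed to be stored in chronological order.

```python
from collections import defaultdict


def history_by_session(records):
    """Group (session, test, outcome) records by test session."""
    by_session = defaultdict(list)
    for session, test, outcome in records:
        by_session[session].append((test, outcome))
    return dict(by_session)


def recent_for_test(records, test, limit=5):
    """Return the latest executions of one test routine, newest first.

    Assumption: records are in chronological order.
    """
    runs = [(session, outcome) for session, name, outcome in records if name == test]
    return runs[::-1][:limit]


records = [
    (1, "test_append", "fail"),
    (1, "test_boolean", "pass"),
    (2, "test_append", "pass"),
]

sessions = history_by_session(records)
append_runs = recent_for_test(records, "test_append")
```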

Generate tests automatically

The generate menu lets you generate new tests for all classes in the system (randomly picked?) or for classes which were last modified.

Extract tests from a running application

This is a simple example of an extracted test case (note that EQA_EXTRACTED_TEST_SET inherits from EQA_TEST_SET and implements all functionality for executing an extracted test).

```eiffel
indexing
    testing: "type/extracted"

class
    STRING_TESTS_001

inherit

    EQA_EXTRACTED_TEST_SET

feature {TESTER} -- Test routines

    test_append_integer
            -- Call `routine_under_test' with input provided by `context'.
        note
            testing: "covers/{STRING}.append_integer"
        do
            run_extracted_test (agent {STRING}.append_integer, ["#1", 100])
        end

feature {NONE} -- Access

    context: !ARRAY [!TUPLE [type: !TYPE [ANY]; attributes: !TUPLE; inv: BOOLEAN]]
            -- <Precursor>
        once
            Result := <<
                [{STRING}, [
                    "this is an integer: "
                ], False]
            >>
        end

end -- class STRING_TESTS_001
```

Each test routine passes the agent of the routine to be tested, along with a tuple containing the arguments (#x referring to objects in `context'). This basically replaces the missing reflection functionality for calling features. context is deferred in EQA_EXTRACTED_TEST_SET and contains all data from the heap and call stack which was reachable by the routine at extraction time. Each TUPLE represents an object, where `inv' defines whether the object should fulfill its invariant or not (if the object was on the stack at extraction time, this does not have to be the case).
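The "#x" convention can be illustrated with a small Python sketch. It is not how EQA_EXTRACTED_TEST_SET is implemented: the function `resolve_arguments` is hypothetical, the context is simplified to a list of already restored objects, and the index after "#" is assumed to be 1-based because the example uses "#1" with a single context entry.

```python
def resolve_arguments(arguments, context):
    """Replace "#n" placeholders by the n-th object of the context.

    Assumption: "#1" refers to the first context object (1-based).
    All other arguments are passed through unchanged.
    """
    resolved = []
    for argument in arguments:
        if isinstance(argument, str) and argument.startswith("#") and argument[1:].isdigit():
            resolved.append(context[int(argument[1:]) - 1])
        else:
            resolved.append(argument)
    return resolved


# Simplified context: the single STRING object from the example above.
context = ["this is an integer: "]

# Mirrors run_extracted_test (agent {STRING}.append_integer, ["#1", 100]):
# the first tuple item is the target object, the second the integer argument.
target, value = resolve_arguments(["#1", 100], context)
result = target + str(value)
```

After resolution, `target` is the string restored from the context and `result` corresponds to what the string would hold after appending the integer.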

This design lets us have the entire test in one file. This is practical especially in the situation where a user should submit such a test as a bug report after experiencing a crash.

The reason for that is mainly the set_up procedure. Creating all objects in context must be done during set_up. If there is a failure, the set_up will be blamed instead of the actual test routine, which makes the test not fail but invalid. This can happen e.g. if one of the objects in the context does not fulfill its invariant, which again could result from simply editing the class being tested. Any suggestions welcome!

Background test execution

Open questions

(This section should disappear as the questions get answered.)

Wish list

If you have any suggestions or ideas which would improve the testing tool, please add them to this section.

tests with context

It would be nice to have contextual test cases. For instance, you would create a test class and an associated "test point" (a kind of breakpoint, or something like aspect programming). Then, when you run the execution in "testing mode" (under the debugger or the testing tool), reaching this "test point" would trigger the associated test case. I agree that this is no longer automatic testing, since you need to make sure the execution passes through this "test point", but it would be useful for launching a specific test with a valid context.

jUnit compatible output format

It would be nice if the testing tool could generate a jUnit compatible output which can then be processed by other tools like a continuous build system. Of course this makes it necessary for the testing tool to be available from the command line.
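To make the wish more concrete, here is a minimal Python sketch of the kind of output meant. It emits the commonly used `testsuite`/`testcase`/`failure` elements of the JUnit XML report format; the function `junit_report` and the shape of the input data are assumptions for illustration, not an existing feature of the testing tool.

```python
import xml.etree.ElementTree as ET


def junit_report(suite_name, results):
    """Render test results as a JUnit-style XML string.

    results is a list of (class_name, test_name, failure_message) tuples,
    where failure_message is None for a passing test.
    """
    failures = sum(1 for _, _, message in results if message is not None)
    suite = ET.Element(
        "testsuite",
        name=suite_name,
        tests=str(len(results)),
        failures=str(failures),
    )
    for class_name, test_name, message in results:
        case = ET.SubElement(suite, "testcase", classname=class_name, name=test_name)
        if message is not None:
            ET.SubElement(case, "failure", message=message)
    return ET.tostring(suite, encoding="unicode")


report = junit_report(
    "STRING_TESTS",
    [
        ("STRING_TESTS", "test_append", "assertion violated: append"),
        ("STRING_TESTS", "test_boolean", None),
    ],
)
```

A continuous build system that understands JUnit reports could then pick up the file written from `report`.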

Compatibility with Getest

Since Gobo test has been available long before Eiffel test, it would be nice if the testing tool also supported Gobo test cases. This is probably really easy to do, since the differences are minimal: basically, Gobo test cases only require a creation procedure. It would also be a good start if EiffelTest still recognized test cases that list creation procedures, as long as default_create is listed.