Difference between revisions of "Design of Roundtrip Parser"

(→Staff token linked list) |

(→Switch between roundtrip and non-roundtrip mode) |

||

| Line 201: | Line 201: | ||

Until now, all the information are stored for later use by the parser. | Until now, all the information are stored for later use by the parser. | ||

| − | == Switch between roundtrip and non-roundtrip mode == | + | === Switch between roundtrip and non-roundtrip mode === |

| − | + | The involvement of the roundtrip function will definitely slow down the parsing process because we more time is spent in creating new objects, copying text and maintaining the token linked-list. To minimize the performance overhead, the Eiffel parser is designed to be able to work on either roundtrip mode or non-roundtrip mode. | |

| − | + | All the things described above is roundtrip mode. In roundtrip mode, all work related to source code preserving is done, and at the end of parsing, we get two things: | |

| + | (1) An AST node annotated with token indexes in token linked-list | ||

| + | (2) A token linked-list in which every token literal is stored | ||

| − | + | The major performance penalty (compared with non roundtrip parser) are: | |

| + | (1) creating of AST nodes to represent keywords, | ||

| + | (2) maintaining the token linked-list, such as copying text, inserting items. | ||

| + | All the modifications are conducted through ast_factory to ensure when we want to switch between roundtrip mode and non-roundtrip mode, we only need to do: | ||

| + | |||

| + | 1. give the parser relative AST factory. | ||

| + | |||

| + | 2. set case_sensitive to True (in roundtrip mode) or to False (in non-roundtrip mode). | ||

=Yet another design= | =Yet another design= | ||

Revision as of 06:26, 8 August 2007

Contents

Introduction to roundtrip parser

Source code transformation has a lot of applications, within which, refactoring and pretty-printing are included. Often, the basic function needed by a code transformation is to regenerate source code from abstract syntax tree (AST). For example, in a feature renaming refactoring, except for the names of the feature to be renamed, all other code formatting should be preserved, e.g., indentation, letter case, comments.

Originally, AST doesn't need to store tokens, for their abstract information is implicitly included in non-terminal AST nodes. In order to perform the above code transformation, we need to modify the parser to remember all the formatting information as well to enable regenerating the source code back, hence the name, roundtrip parser.

How to remember comments, separators and text

To make roundtrip code regeneration possible, the following things have to be maintained in AST nodes so when those AST nodes are processed later, correct formatting information can be retrieved:

- Case information of identifiers such as class/feature/local names

- Case information of keywords

- Comments

- Spaces such as normal space, tab, newline characters. We define that comment and separator are called break

For identifiers, it is easy to store case information of identifiers into AST nodes as identifiers are represented as a node in AST.

For keywords, because originally, there is no AST nodes representing them, one easy solution is to add some keyword nodes into AST. For example, in AST node for if statement, some keyword sub nodes can be added for keyword "if", "then", "end". Keywords can only appear at fixed locations in an AST node, so there is no ambiguity of their order. By fixed location, I mean, for example, in an AST node for if statement, the keyword "if" can only appear as the first token, the "end" keyword can only appear as the last token.

For comments and spaces, it's even tricker for two reasons:

- They are not representable in original AST nodes. This prevents the way we deal with keywords from being used here.

- Their appearances are not in a normal form. for example, in a if statement, you can use a single space as delimiter between the "if" keyword, the conditional expression and the "then" keyword, but you can also use any combination of space, tab, newline as delimiters. Another example is that between two statement, there can be a comment, or not.

So, how to attach break information into AST nodes? A popular method is to attach break information to the AST node appear just before (this kind of break is called a trailing break) or after (this kind of break is called a leading break) it. For example, in the following code, comments are attached to the AST node that appears just after:

feature -- Access item: STRING

comment `-- Access’ will be attached to token `item’. This is not only inappropriate but also makes it very difficult to extract information like “What is the tag for this feature clause?”.

And in the following example, comments are attached to AST nodes that appear just before:

statement1

-- Comment 1

statement2

-- Comment 2

"-- Comment 1" is attached to the AST node representing statement1, and "-- Comment 2" is attached to the AST node representing statment2. This method can be applied to any kind of break, i.e., we can consider that the newline character after statement1 and the indentation before comment 1 are also attached the AST node of statement1.

One issue of this method is that it may result in intentionally wrong attachments. Consider the follow snippet:

foo is do goo -- Check flag if flag = 3 then ... end end

If we are using a trailing break mechanism, comment `-- Check flag' will be attached to feature `goo'. But this is certainly wrong.

In fact, how to deal with break in AST tree is an open question. Because sometimes it is difficult to tell which AST node should a break be attached to. Here is some words from a parser design:

Comments, newlines, spaces, and indentation in code make it easier for humans to read, but computers just throw these extras away. Virtually every compiler tool just discards comments and whitespace, because they're not really "part of the program" as far as the tool is concerned. The ANTLR C grammar that Jazillian uses is no exception. So we changed the grammar to keep the comments, but rather than have each comment converted to a token (and thus mess up all our other rules), each comment is associated with a particular token in the input. The problem then becomes "what if that token is deleted or changed?"

When a token that's got comments associated with it is deleted or replaced, a new token is chosen (using heuristics to choose a good one) to hold the comments.

The paper "Preserving the Documentary Structure of Source Code in Language-based transformation Tools" by Michael L. Van De Vanter at Sun Laboratories explains why this approach sometimes doesn't work well.

The paper can be found at http://research.sun.com/projects/jackpot/COM.sun.mlvdv.doc.scam_nov01.abs.html

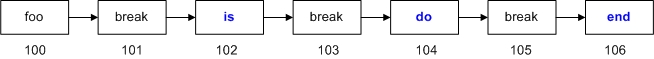

So, there is another way: Store all the tokens (now comments and separators are considered as tokens too) in a linked-list. For code:

foo is -- Comment do end

We have the linked-list:

And store the terminals into AST nodes just like in the above method, but do not store breaks. e.g., store "foo", "item", "is", "do" and "end", but do not store new-line, space, `-- Comment' into AST nodes. Breaks are maintained only in the linked-list. A token in the linked-list can be accessed by its position index. For example, 100 for "foo" and 106 for "end".

Every time when a visitor or a pretty-printer needs to print the source code, it will access to this linked-list to get information. It is easy to spot the location of every single piece of break.

In case that the AST tree hierarchy is static (we don't allow to change part of the AST tree dynamically, just as the parser is for the moment), we only need to use an ARRAYED_LIST and store an integer index of a terminal in non-terminal AST node to save space. For example, for the "do" keyword in the above code snippet, we create an AST node, but, instead of storing the text of the keyword in this AST node, we only store an index of the "do" keyword token which is 104 in the linked-list.

The drawback is time must be spent in searching this long list.

But it is more flexible when we want to do some refactoring.

Implementation details

To implement the roundtrip function described above, we need to modify AST nodes and Eiffel scanner/parser such that:

- Added nodes representing keywords in existing AST nodes

- For every token recognized by the scanner, we insert this token (it can be either a normal token or a break token) into the linked-list. If it's a normal token, we attach the index of it in the linked-list to the related AST node.

Add AST nodes for keywords

We use a class KEYWORD_AS to represent a keyword AST node, and then, for every kind of AST structure which contains keywords, we add keyword attributes. For example, class IF_AS is the AST class for if statement, its sketch is like this:

class IF_AS feature -- Attributes condition: EXPR_AS -- Conditional expression compound: EIFFEL_LIST [INSTRUCTION_AS] -- Compound, i.e., statement list in this if-clause. end

After adding keyword attributes, it will look like this:

class IF_AS feature -- Attributes condition: EXPR_AS -- Conditional expression compound: EIFFEL_LIST [INSTRUCTION_AS] -- Compound, i.e., statement list in this if-clause. if_keyword: KEYWORD_AS -- Keyword AST for "if" then_keyword: KEYWORD_AS -- Keyword AST for "then" end_keyword: KEYWORD_AS -- Keyword AST for "end" end

Staff token linked-list

For every terminal token we meet (including break token), we need to insert it into the token linked-list. Also, we need to attach the index of that token into the AST node. For example, for the following snippet:

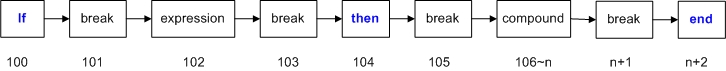

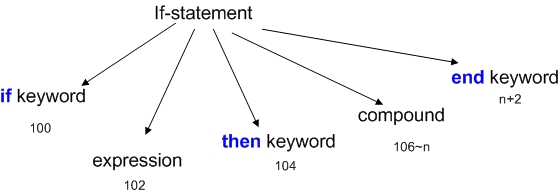

if expression then compound end

We have the token linked-list:

and the AST node for the if statement:

When the above AST for if statement is visited in flavor of code regeneration, we know that the original text of the "if" keyword can be retrieved from the token linked-list at position 100.

In an Eiffel file, separators are defined by:

[ \t\r\n]+

To remember this break information, we add actions to the above regular expressions in eiffel.l (Eiffel lexer description file). For example:

[ \t\r\n]+ { ast_factory.create_break_as (Current) }

This means that whenever separators are met, we create an AST node of type BREAK_AS:

create_break_as (a_scn: EIFFEL_SCANNER) is -- New BREAK_AS node local b_as: BREAK_AS do increase_match_list_count -- Increase token index `match_list_count'. create b_as.make (a_scn.text, a_scn.line, a_scn.column, a_scn.position, a_scn.text_count) -- Create an AST node for the break. b_as.set_index (match_list_count) -- Attach the token index `match_list_count' to the newly created AST node `b_as'. extend_match_list (b_as) -- Insert the AST node `b_as' into the token linked-list. end

For keywords, similar code patterns presents.

We need to do some extra work when matching reserved word once because there are regular expressions embedded in the expression to match once.

The reason to use an ast_factory while not just last_leading_break.append (text) is for roundtrip / non-roundtrip mode switch. e.g., if we are in non-roundtrip mode, ast_factory.append_break does nothing.

Until now, all the information are stored for later use by the parser.

Switch between roundtrip and non-roundtrip mode

The involvement of the roundtrip function will definitely slow down the parsing process because we more time is spent in creating new objects, copying text and maintaining the token linked-list. To minimize the performance overhead, the Eiffel parser is designed to be able to work on either roundtrip mode or non-roundtrip mode.

All the things described above is roundtrip mode. In roundtrip mode, all work related to source code preserving is done, and at the end of parsing, we get two things: (1) An AST node annotated with token indexes in token linked-list (2) A token linked-list in which every token literal is stored

The major performance penalty (compared with non roundtrip parser) are: (1) creating of AST nodes to represent keywords, (2) maintaining the token linked-list, such as copying text, inserting items.

All the modifications are conducted through ast_factory to ensure when we want to switch between roundtrip mode and non-roundtrip mode, we only need to do:

1. give the parser relative AST factory.

2. set case_sensitive to True (in roundtrip mode) or to False (in non-roundtrip mode).

Yet another design

How to store tokens

In this design, we don't attach a break to any token. Instead, we use a a list called `match_list' to store all tokens read, including breaks. In other words, the whole source code is stored in `match_list'.

In AST_FACTORY, we add the following features:

new_match_list (l_size: INTEGER): AST_NODE_LIST extend_match_list (a_list: AST_NODE_LIST; a_match: LEAF_AS)

When the compiler is working on roundtrip mode (which means we give the parse a AST_ROUNDTRIP_FACTORY), it will create a `match_list' before it starts to parse a class, and during parsing, and when a token is read, it will extend the token into this `match_list'. When parsing ends, this `match_list' will be given to the result CLASS_AS object.

How to regenerate code

Use a vistor to regenerate code. This visitor will recursively process the AST tree with the help of `match_list' and index information stored in every non-terminal AST node.

The visitor works like this:

the visitor has some index:

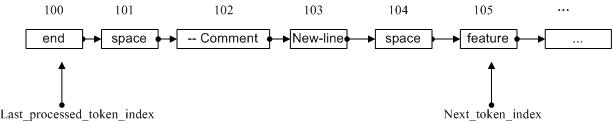

last_processed_token_index, next_token_index

last_processed_token_index shows that last token in `match_list' that has been processed. And `next_token_index' shows the next token that will be processed.

For example:

As the picture shown, the `end' token has been processed, and now we are about to process the `feature' token.

Before we process the `feature' token, the visitor will uses these two index to check if there are some tokens in between these index that have not been processed. If so, the visitor will process those tokens first, and then process the `feature' token. In this way, we will not lost a single token.